I was sick of downloading my shows manually. It actually takes up quite a bit of time, especially if you add it all up over the years. Before I had my server set up, I was running Deluge with the YaRSS2 plugin, which worked wonderfully well as long as my computer (kind of a power hog) was turned on.

http://dev.deluge-torrent.org/wiki/Plugins/YaRSS2

But since I have a low-power server now, I can let it run 24/7 without worries. Here’s my experience with it.

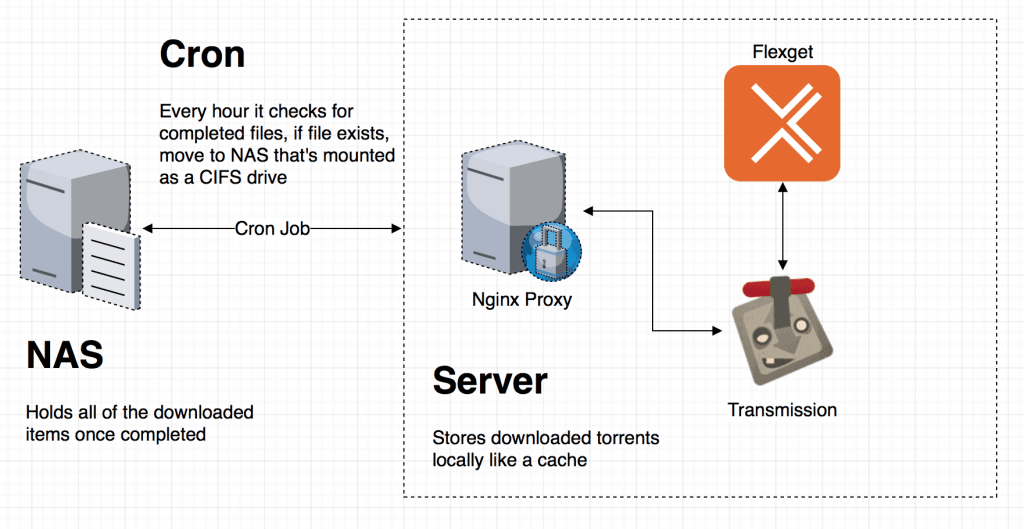

Before I get to the commands, here’s a quick breakdown of what’s happening.

Transmission is the torrenting client, plain and simple. I connect to it through the WebUI.

Flexget is the daemon that queries the different RSS feeds set up in the config.yml file. It works like a rule-based regex search for the files and is able to detect quality settings from the file name. It also tracks the files that have been downloaded, rejected, or accepted but not downloaded yet. Upon matching a file, it sends a download job to Transmission.

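Using the kukki/docker-flexget image, here’s how I set up my Flexget container. Note that docker create only creates the container, so it still has to be started afterwards with docker start flexget.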

    docker create --name flexget \
      -v /home/log/configs/flexget:/root/.flexget \
      -v /home/log/configs/transmission/watch:/torrents \
      --restart=on-failure \
      kukki/docker-flexget daemon start --autoreload-config
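A minimal config.yml for this kind of setup might look something like the sketch below. The feed URL, the task name `tv_shows` and the show name are placeholders. Using the `download` plugin to drop .torrent files into `/torrents` is an assumption based on the volume mapping above, and it relies on Transmission's watch directory being enabled so that it picks the files up from there.

```shell
# Hypothetical example: writes a starter Flexget config into the current
# directory. Copy it to the host folder mounted at /root/.flexget
# (here /home/log/configs/flexget) once the placeholders are filled in.
cat > config.yml <<'EOF'
tasks:
  tv_shows:                            # placeholder task name
    rss: https://example.com/feed.rss  # placeholder feed URL
    series:
      - Example Show:                  # placeholder show name
          quality: 720p+               # accept 720p or better
    download: /torrents                # shared with Transmission's watch folder
EOF
```

Since the daemon is started with --autoreload-config, changes to the file should be picked up without recreating the container.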

Transmission’s docker settings are a little bit more complicated, as it’s the public-facing container that nginx-proxy routes to. I’m using the linuxserver/transmission image for this container.

    docker create --name=transmission \
      -v /home/log/configs/transmission:/config \
      -v /home/log/data/torrents:/downloads \
      -v /home/log/configs/transmission/watch:/watch \
      -e VIRTUAL_HOST=[your-domain-here] \
      -e TZ=GMT+8 \
      -e PGID=1000 -e PUID=1000 \
      -p 9091 -p 51413 \
      -p 51413/udp \
      -e VIRTUAL_PORT=9091 \
      --restart=on-failure \
      linuxserver/transmission

As you may have noticed in the diagram, the server stores the downloaded torrents as a cache. There are a couple of reasons for this setup. Firstly, I upgraded the server to an SSD, so space is at a premium now. But I already have a CIFS mount of my NAS, so why not write directly to it?

From my experience downloading straight to the NAS over the past year, that configuration seems to screw up after a couple of months. Something about the buffer/network pipeline just doesn’t like staying connected for such a long time and having data streamed to it on a regular basis. iSCSI would’ve worked perfectly but unfortunately, my NAS isn’t that high-end.

I’m experimenting with this new configuration and hopefully, it stays stable in the long run.

- Transmission first stores the download on the server’s local SSD

- Every hour, a cron job checks whether there are newly downloaded files

- If there are, it moves them to the NAS
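The steps above can be sketched as a small shell script. This is only an illustration under a few assumptions: finished downloads end up in a `complete` subfolder of the download directory, the NAS share is mounted at `/mnt/nas/torrents`, and the script lives at `/home/log/bin/move-to-nas.sh`. All three paths, and the function name `move_completed`, are hypothetical.

```shell
#!/bin/sh
# Sketch: move everything from a "finished downloads" folder to the NAS.

move_completed() {
    src=$1
    dest=$2

    # Nothing to do if the source folder does not exist.
    [ -d "$src" ] || return 0

    # Refuse to run if the destination is missing, for example because
    # the NAS share is not mounted. This avoids writing to the local disk.
    [ -d "$dest" ] || return 1

    for item in "$src"/*; do
        # If the folder is empty, the glob stays unexpanded; skip it.
        [ -e "$item" ] || continue
        mv -- "$item" "$dest"/
    done
}

# Only act when called with both paths: move-to-nas.sh SRC DEST
if [ "$#" -eq 2 ]; then
    move_completed "$1" "$2"
fi
```

An hourly crontab entry for it could then look like `0 * * * * /home/log/bin/move-to-nas.sh /home/log/data/torrents/complete /mnt/nas/torrents`, again with the paths swapped for your own.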

My assumption is that a move command would be less taxing on the NAS than a constant write of data that may or may not be corrupted.

Why not use rsync? Because I fucked something up and the result is the same either way.

Hopefully, this will be useful for anybody reading it. Or myself in the future.